| KPI | Description | L1: Initial (ad hoc) | L2: Managed (managed by humans) | L3: Defined (executed by tools) | L4: Quantitatively managed (enforced by tools) | L5: Optimizing (optimized by tools) |
|---|---|---|---|---|---|---|
| Lead time for changes | Adapted to the ECU project: product delivery lead time, i.e. the time it takes to go from code committed to code successfully running at the release customers (carline, BROP orga) | One month or longer | Between one week and one month | Between one day and one week | Less than one day | Less than one hour |
| Deployment frequency | Adapted to the ECU project: the frequency of code deployment to internal customers (carline, BROP orga). This can include bug fixes, improved capabilities and new features. | Fewer than once every six months | Between once per month and once every six months | Between once per week and once per month | Between once per day and once per week | On demand, once per day or more often |
| Mean Time to Restore (MTTR) | How quickly can a formerly working feature/capability be restored? (Not: how quickly can a bug be fixed.) | More than one month | Less than one month | Less than one week | Less than one day | Less than one hour |
| Change Fail Percentage | Applicable? | | | | | |
| Pipeline knowledge bus factor | How many people are able to fix broken pipelines within a reasonable time and/or add new pipeline features of significant complexity (this doesn't include rocket science)? | ≤ 1 | 2 | 3 | 3 + people in various teams | At least one in most project teams |
| DevOps | Are pipelines managed by the software developers or not? | Pipelines are (almost) exclusively managed by dev-team-external staff | Pipelines are maintained by the developers; new capabilities are added by externals | Pipelines are maintained and improved by the developers in almost all cases | Pipelines are maintained and improved by the developers in all cases | |
| Accessibility | Can all contributors, including those from suppliers, access whatever they need for their jobs? | Developers can access only fragments of the system, and additional access is hardly possible (only with escalation/new paperwork) | Developers can get access to most parts of the system, and for the other parts there is some replacement (libraries) | Developers don't have full access; however, they can trigger a system build with their changes and get full results | Developers have access to the full project SW and are able to do a full system build | |
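
The time-based rows above (lead time for changes, MTTR) can be evaluated mechanically once the durations are measured. The following is a minimal sketch, not part of the original model: the function name `lead_time_level` is hypothetical, and "one month" is assumed to mean 30 days because the table does not define it.

```python
from datetime import timedelta

# Assumption: the table does not define "one month"; 30 days is used here.
ONE_MONTH = timedelta(days=30)

# Exclusive upper bounds, checked from the best level downwards.
# The bounds follow the "Lead time for changes" row.
LEAD_TIME_BOUNDS = [
    (timedelta(hours=1), 5),  # less than one hour
    (timedelta(days=1), 4),   # less than one day
    (timedelta(weeks=1), 3),  # between one day and one week
    (ONE_MONTH, 2),           # between one week and one month
]


def lead_time_level(lead_time: timedelta) -> int:
    """Map a measured lead time to a maturity level from 1 to 5."""
    if lead_time < timedelta(0):
        raise ValueError("lead time must not be negative")
    for upper_bound, level in LEAD_TIME_BOUNDS:
        if lead_time < upper_bound:
            return level
    return 1  # one month or longer


if __name__ == "__main__":
    # Hypothetical measurements: commit-to-customer durations.
    for sample in (timedelta(minutes=30), timedelta(days=3), timedelta(days=45)):
        print(sample, "-> level", lead_time_level(sample))
```

The MTTR row uses the same boundaries, so the same mapping could be reused for restore times. How to aggregate many measurements (median, worst case, percentile) is left open by the table and would need to be agreed on separately.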

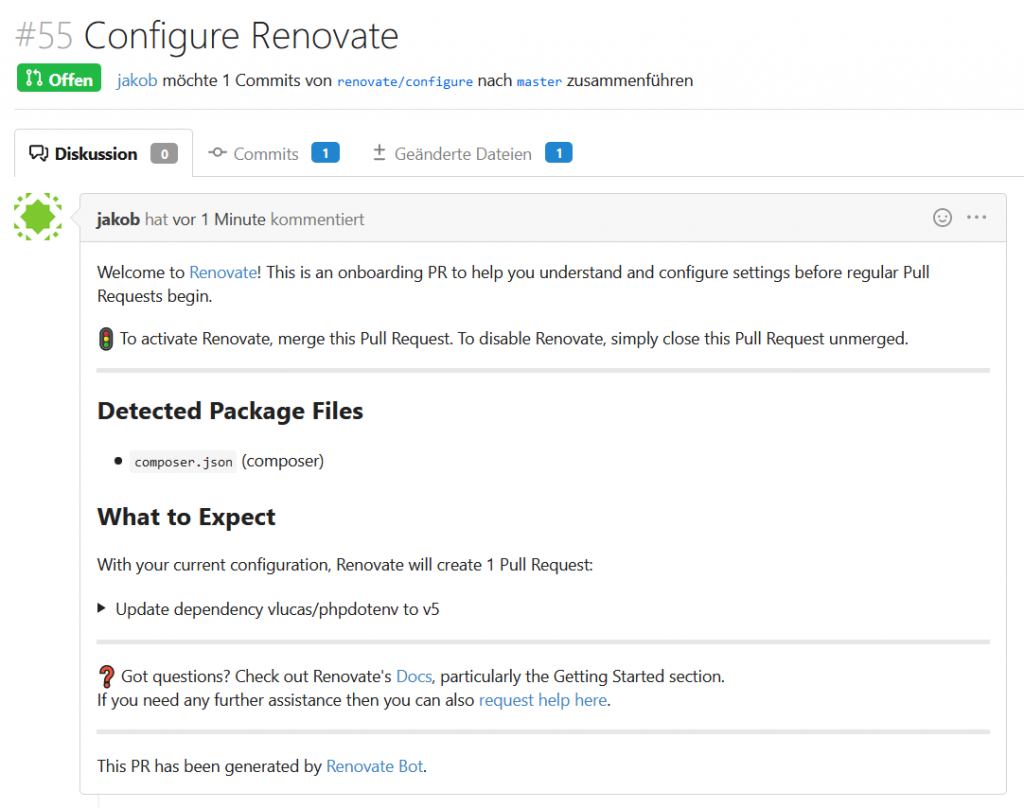

| KPI | Description | L1: Initial (ad hoc) | L2: Managed (managed by humans) | L3: Defined (executed by tools) | L4: Quantitatively managed (enforced by tools) | L5: Optimizing (optimized by tools) |
|---|---|---|---|---|---|---|
| Review Culture | What (code) review culture does the project have? | Optional reviews without guidelines and no clarity about who is actually supposed to give reviews; regular flamewars | Mandatory reviews with defined reviewers; defined guidelines; flamewars seldom happen | Tools conduct essential parts of the review (code style, consistency); barely any flamewars | | |
| Communication | | | | | | |
| Release Cycle Duration | Time needed from the last functional contribution until the release is officially delivered | Months | Weeks | Days | Hours | Minutes |
| Delayed deliveries | How often do delayed contributions lead to special activities during a release cycle? | Every time | Often (>50%) | Seldom (<25%) | Rarely (<10%) | Never |
| Release timeliness | How often are releases not delivered on time? | Often (>50%) | Seldom (<25%) | Rarely (<10%) | Never | No release timelines needed |
| Release scope | Do given timelines determine the planned scope for the next release (excluding catastrophic surprise events)? | Planned scope is mostly considered unfeasible; priority discussions are ongoing at any time | 80% of planned scope seems feasible; priority discussions come up repeatedly during the implementation cycle | 100% of planned scope is usually feasible | Planned scope doesn't fill the available capacity, leaving room for refactoring and technical debt reduction | No scope is defined from outside the dev team; things are delivered when they are done (team is trusted) |
| Release artifact collection | How are release artifacts gathered, combined and put together? | Everything manual | Some SW manual, some SW automatic | All SW automatic, documentation manual | SW + documentation automatic | – |
| Traceability | Consistency between configurations, requirements, architecture, code, test cases and deliveries | No consistency/traceability targeted | Incomplete manual activities | Mostly ensured by tools | Untraceable/inconsistent elements are syntactically not possible | Untraceable/inconsistent elements are semantically not possible |
| Delivery | How is delivery happening? | Ad hoc without appropriate tooling (mail, USB stick, network drives) | Systematic with appropriate tooling (Artifactory, MIC) | Automatic delivery from development to customer with manual trigger | Automatic delivery for every release candidate | |
| Ad-hoc customer/management demoability | | | | | | |
| Feature Toggles | | | | | | |
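
The percentage-based rows above can be scored from simple counts. The sketch below covers the "Delayed deliveries" row; the function name `delayed_delivery_level` is hypothetical. The table leaves the range from 25% to 50% unassigned, so this sketch maps it to level 2 as an explicit assumption.

```python
def delayed_delivery_level(delayed_cycles: int, total_cycles: int) -> int:
    """Map the share of release cycles disturbed by delayed contributions
    to a maturity level from 1 to 5, following the "Delayed deliveries" row."""
    if total_cycles <= 0:
        raise ValueError("total_cycles must be positive")
    if not 0 <= delayed_cycles <= total_cycles:
        raise ValueError("delayed_cycles must be between 0 and total_cycles")

    if delayed_cycles == 0:
        return 5  # never
    share = delayed_cycles / total_cycles
    if share < 0.10:
        return 4  # rarely (<10%)
    if share < 0.25:
        return 3  # seldom (<25%)
    if delayed_cycles == total_cycles:
        return 1  # every time
    # Often (>50%). Assumption: the 25%..50% range, which the table
    # does not cover, is also assigned to level 2.
    return 2


if __name__ == "__main__":
    # Hypothetical history: 3 of the last 20 release cycles were disturbed.
    print(delayed_delivery_level(3, 20))
```

The "Release timeliness" row uses the same percentage bands shifted by one level, so a similar function with adjusted return values could cover it.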

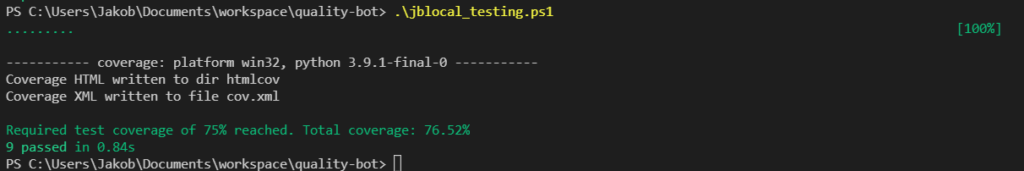

| KPI | Description | L1: Initial (ad hoc) | L2: Managed (managed by humans) | L3: Defined (executed by tools) | L4: Quantitatively managed (enforced by tools) | L5: Optimizing (optimized by tools) |
|---|---|---|---|---|---|---|
| Test automation | | No or a bit of exploratory testing | Test plan is executed by human testers for all test activities | All test activities except for special UX testing are automated | No contribution can avoid passing the obligatory automated tests | New test cases are derived automatically |
| Test activities | | A bit of exploratory testing | Manual, systematic black box testing | Static code analysis, unit testing, integration testing, system testing | E2E acceptance testing | Chaos engineering, fuzzing and mutation testing |
| Virtual Targets | | | | | | |
| Quality Criteria | | SW quality management does not exist/is not known/is not clear | SW quality management is a side activity and first standards are "in progress" | SW quality is someone's 100% occupation and a quality standard exists | SW quality is measured every day | Measured SW quality gaps are actively closed with highest priority |
| Regressions | How often do regressions occur? (Regression = loss of once working functionality) | Regressions happen with every release | Regressions happen often (>50%) | Regressions happen seldom (<25%) and are known before the release is delivered | Regressions happen rarely (<10%) and are known before the released software is assembled | |
| Reporting | Videowall, dashboards, KPIs | No or ever-changing KPIs | Defined KPIs, manually measured | Some KPIs are automatically measured, but their meaningfulness is debated, so they don't play a big role in decision-making | Automatically measured and live displayed KPI data, used as significant input by decision-makers | Live KPI data is the main source for planning and prioritization |
| A/B Testing | | | | | | |
| Release Candidates | How often are release candidates made available? | No release candidates exist | Before a release, release candidates are regularly identified and shared | Daily release candidates | Every change leads to a release candidate | |
| Master branch | When do developers contribute to master? | Irregularly; feature branches are alive for weeks or more | Regularly; feature branches exist for days | Changes are usually merged to master every day | | |
| SW Configuration Management | How are product variants and generations managed? | No systematic variant management; some variants are simply ignored so far | Systematic manual management of variants; variants are partially covered by branches | All variants are managed via configurations and pipelines on the same branch | Software is reused over generations and variants | |
| Reproducibility | | | | | | |
| Customer Feedback | Customers can be internal, too; not only end users | Customer not known/existing, no customer feedback available | Internal proxy customer, providing feedback which doesn't play a big role | External customer is available and their feedback plays a relevant role | End users' feedback is available to the developers and a relevant input for design, planning and prioritization | End users' feedback is the main input for design, planning and prioritization |
| Heartbeat | Is there a regular heartbeat? | No regular heartbeat (e.g. time between releases) | Regular heartbeat, but exceptions happen often | Regular heartbeat without exceptions | | |
| IT reliability | | SW Factory is regularly breaking; developers have local alternatives in place to not get blocked | SW Factory is often broken | .. | … | |
| Developer Feedback | How quickly do developers get (latest) feedback from the respective test activities? | Months after the respective implementation | Weeks after the respective implementation | Days after the respective implementation | Hours after the respective implementation | Minutes after the respective implementation |
| DevSecOps Stuff | | | | | | |
| Security Scanning | | | | | | |
| ISO26262? | | | | | | |